NeuBahar Lab is based in the Electrical and Computer Engineering Department at the University of Alberta. NeuBahar is pronounced noʊ-bæˈhɑːr and means "new spring", a poetic reference to renewal, growth, and the arrival of spring.

Our research broadly covers machine learning, representation learning, generative models, world models, inverse problems, interpretability, and NeuroAI. Within generative modeling, we focus on interpretable representation learning and on developing world models that are physically grounded and controllable. We explore how active inference and principled architectures can make intelligent systems mechanistically interpretable and help them form representations that are disentangled and compositional.

Our research is inspired by the brain. A core challenge in neuroscience and AI is understanding how intelligent systems perceive and interpret the world from incomplete or noisy data. Humans, for instance, can robustly recognize visual scenes or sounds even under significant noise or occlusion. The Bayesian brain hypothesis posits that the brain achieves this by treating perception as active inference, integrating sensory information with internal models to infer the most likely explanation of what it senses. However, how the brain forms internal representations that are meaningful, interpretable, robust, and generalizable from noisy inputs remains largely unknown. What kind of internal world model does the brain construct, and how is it learned? Addressing this grand challenge in neuroscience also informs AI, where we seek intelligent systems that can reliably learn generalizable world models. Our research is guided by two fundamental questions:

- What learning architectures allow AI systems to perform mechanistically interpretable and robust inference?

- What representations should AI systems learn? Which structural properties of internal representations make them interpretable, understandable by humans, or aligned with meaningful physical variables?

Perception: To perceive is to build a generative model of the world that allows one to resolve ambiguity, correct errors, and infer missing information when sensory input is incomplete or inconsistent.

What are inverse problems? Scientists and engineers study systems in neuroscience, physics, biology, and engineering by taking measurements, then seek to infer the underlying causes (representations) or system properties that generated them. Inverse problems are typically ill-posed: multiple hidden causes may explain the same observations (effects), especially when the data are noisy or incomplete. Without additional constraints or prior knowledge, the solution may be non-unique or unstable. Solving an inverse problem therefore involves combining evidence from the data with prior knowledge to infer the most likely explanation. Understanding how biological systems, such as the brain, perform this integration can guide the design of more interpretable and effective AI systems.
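To make the idea of combining data with prior knowledge concrete, here is a minimal sketch under assumptions of our own choosing, not a description of the lab's methods: a linear forward model `y = A x + n` with Gaussian noise and a zero-mean Gaussian prior on the hidden cause `x`. Under these assumptions, the most likely explanation (the maximum a posteriori, or MAP, estimate) has the closed form of ridge regression. The helper `map_estimate` and the toy numbers are hypothetical and for illustration only.

```python
import numpy as np


def map_estimate(A, y, noise_var, prior_var):
    """Hypothetical helper: MAP estimate of x for the linear model y = A x + n.

    Assumes Gaussian noise n ~ N(0, noise_var * I) and a zero-mean Gaussian
    prior x ~ N(0, prior_var * I). Maximizing p(y | x) p(x) is then equivalent
    to minimizing ||y - A x||^2 / noise_var + ||x||^2 / prior_var, whose
    minimizer solves (A^T A + lam I) x = A^T y with lam = noise_var / prior_var.
    """
    lam = noise_var / prior_var
    n = A.shape[1]
    return np.linalg.solve(A.T @ A + lam * np.eye(n), A.T @ y)


# Toy ill-posed problem: a single measurement of the sum of two unknown causes.
A = np.array([[1.0, 1.0]])
y = np.array([2.0])

# Many different causes explain the observation equally well (zero residual)...
candidates = [np.array([2.0, 0.0]), np.array([0.0, 2.0]), np.array([1.0, 1.0])]
for x in candidates:
    print(x, "residual:", np.linalg.norm(A @ x - y))

# ...but combining the data with the prior selects a single, stable answer.
x_hat = map_estimate(A, y, noise_var=0.01, prior_var=1.0)
print("MAP estimate:", x_hat)  # both entries close to 1
```

Lowering `prior_var` relative to `noise_var` trusts the prior more and shrinks the estimate toward zero; the Gaussian prior here is only one illustrative choice of prior knowledge.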

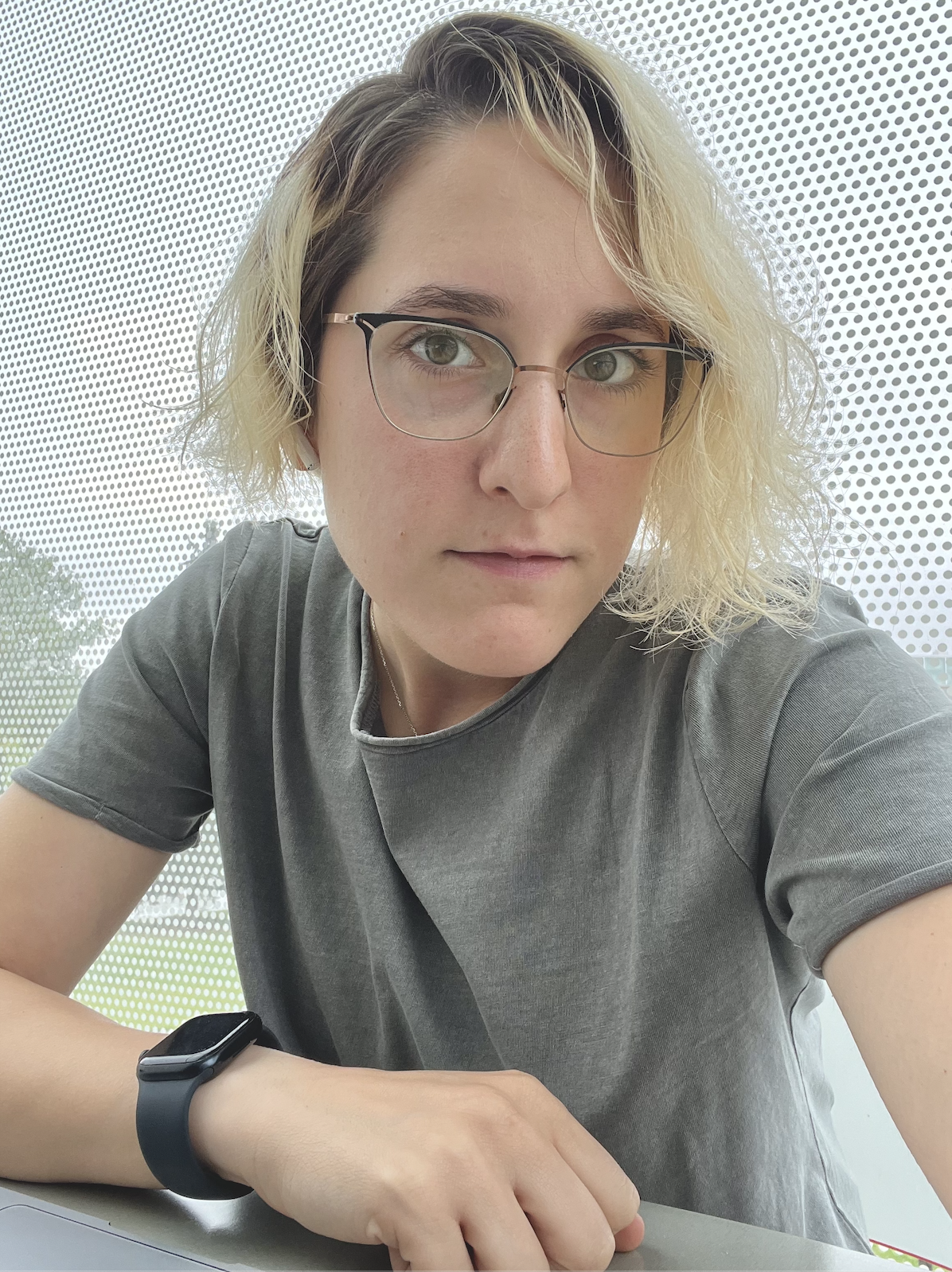

Principal Investigator

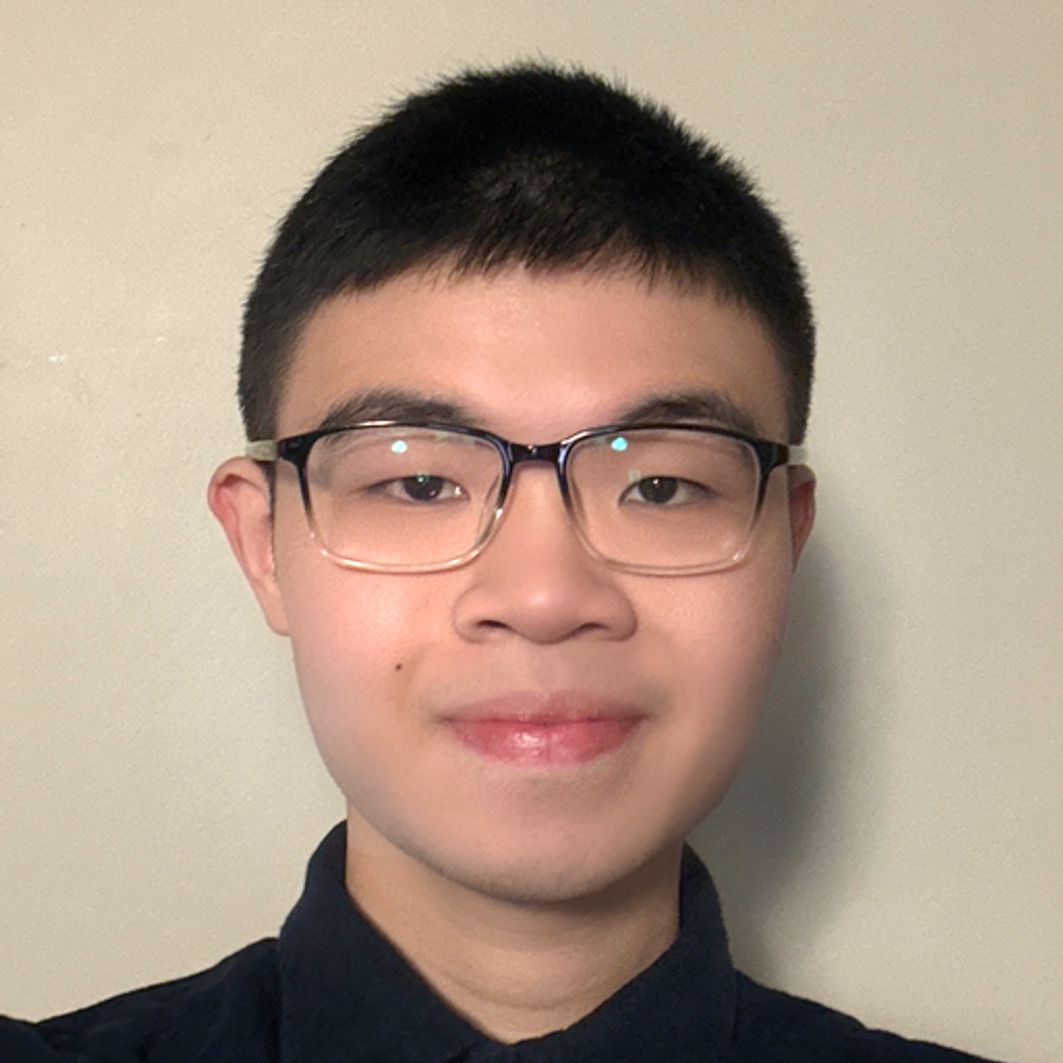

PhD Student

MSc Student (visiting)

Incoming MSc Student (Fall 2026)

Visiting Researcher

Undergraduate Researcher

Undergraduate Researcher

Ailsa Shen (remotely visiting undergraduate student from Caltech) - Feb. 2025 to present

Valérie Costa (remotely visiting master's student from EPFL) - Oct. 2024 to Aug. 2025

Siddhesh Salphale (visiting undergraduate student from IIT Kharagpur) - Dec. 2024 to Aug. 2025

Luca Ghafourpour (visiting master's student from ETH) - Jan. 2025 to Aug. 2025

Aditi Chandrashekar (visiting undergraduate student from Caltech) - Sept. 2023 to May 2025

Rayhan Zirvi (visiting undergraduate student from Caltech) - Feb. 2024 to May 2025

Sanvi Pal (visiting undergraduate student from Caltech) - June to Dec. 2024

Bobby Wang (visiting undergraduate student from Caltech) - Feb. to Dec. 2024

Freya Shah (visiting undergraduate student from Ahmedabad University) - June to Aug. 2024

Austin Wang (visiting undergraduate student from Caltech) - July to Dec. 2023